Last week I attended a series of information visualisation workshops run by Stephen Few. The classes were based around his three books to date: Show Me The Numbers, Information Dashboard Design and Now You See It. Here follows an overview of the material covered and my thoughts on the event.

Show Me The Numbers

Day one of the workshops was titled "Designing Tables and Graphs to Enlighten" and focused on how to effectively communicate a single story in a data set using a table or graph. The starting point was to highlight that the Gricean Maxims apply equally well to the communication of quantitative data as they do to conversational communication, i.e. information visualisation should:

- be as informative as necessary but not more informative than necessary (maxim of quantity)

- not convey any message that is believed to be false or for which there is a lack of evidence (maxim of quality)

- be relevant (maxim of relation)

- be brief and orderly, avoiding ambiguity and obscurity of expression (maxim of manner)

Fundamentally, to achieve this, Stephen Few proposes two major steps. First, you should determine the medium that tells the story best. (Should you use a table or a graph? If a graph, then which kind of graph?) Then you must design the chosen component so that the story is told clearly.

For the first step, the relative strengths and weaknesses of tables and graphs and different graph types were presented. Regarding graphs, Stephen presented what he considers to be the 7 relationships commonly displayed in graphs - time-series, ranking, part-to-whole, deviation, distribution, correlation and nominal comparison - and which graph types most concisely convey these relationships. Unsurprisingly, pie charts were a graph type singled out for their general ineffectiveness (see Save The Pies For Dessert for details). In addition to graph types, the concept of small multiples was highlighted as a simple technique for improving the affordance of graphs that endeavour to compare across data that differs only along a single variable.

Oh, and 3D charts, where to begin... The almost guaranteed occlusion (where something is hidden behind something else) meaning it is impossible to see the entire picture at once? The inability to directly compare values, sizes and/or positions due to depth? The colour variation due to "lovely" lighting effects?

With determining the appropriate medium covered, a range of techniques and areas for consideration were introduced with the aim of maximising the effectiveness of the chosen component(s). Edward Tufte's argument that the "data-ink ratio" should be maximised by removing, de-emphasising and/or regularising the unnecessary and emphasising the most important was introduced as the foundation of good table and graph design. Additionally, the fundamentals of visual perception were considered, along with how they can be harnessed to improve the clarity of the story being portrayed. In particular, attributes such as size, shape, orientation and colour are processed pre-attentively. That is, we perceive and process them instantaneously, in a highly parallelised fashion and without conscious thought, thereby laying down an immediate interpretation before conscious thought is required. Further to this, the pre-attentive attributes of length, 2-dimensional position, width, size and colour intensity are perceived quantitatively, i.e. some values are greater than others, and are therefore powerful building blocks for data visualisation.

As well as covering areas such as where best to locate the scale, how to arrange the visualisation to avoid label rotation and how to minimise problems associated with legends, there was an emphasis on the importance of colour, from when and where it is (not) required to palette choice. A fair amount of Stephen's thoughts on colour are covered in his article Rules for Using Color. A couple of useful links that came up were Cynthia Brewer's Color Brewer, a tool for colour palette choice, and VisCheck, a tool for simulating colour blindness.

Information Dashboard Design

The 2nd day of the workshops, "Dashboard Design for at-a-glance monitoring", built heavily on the first day's lessons and focused on techniques and constructs that are of use when designing dashboards. First up was the problem that the term "dashboard" is used for all manner of products. Stephen defines a dashboard as "a visual display of the most important information needed to achieve one or more objectives that has been consolidated on a single computer screen so it can be monitored and understood at a glance" and states that "dashboards are not comprehensive tools for analysis, decision making or management". The monitoring requirement suggests that to some extent there must be a "live" element to the screen, while their not being comprehensive tools suggests that there should not be an abundance of controls to facilitate filtering, etc. In my opinion Stephen is potentially limiting his audience with this, as most of what he presents under the context of dashboard design is just as valuable to report design and data presentation and/or analysis applications in general.

We started by focussing on the common mistakes made in dashboard design, such as introducing meaningless variety, arranging data poorly, misusing or overusing colour and introducing useless decoration. Unfortunately, many of these problems appear to stem from vendors. They are (understandably) assumed to be experts, yet their examples are usually little more than marketing fluff, so their designs are usually more concerned with graphical glitz than the actual intended purpose of a dashboard: communication.

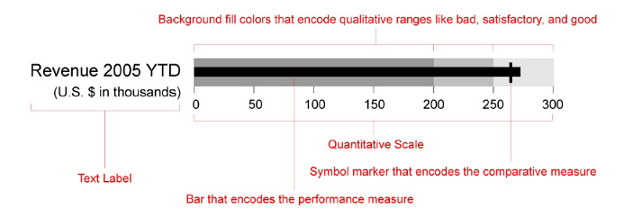

Moving on to considering the techniques and practices that will improve dashboard design, the core idea was once again Tufte's "data-ink ratio" argument: "reduce the non-data ink; enhance the data ink". A number of objectives specifically for the visual design of dashboards were also prescribed: eliminate clutter and distraction, group data into logical sections, highlight what's most important, support meaningful comparisons and discourage meaningless comparisons. Each of 6 categories of dashboard display mechanisms (graphs, icons, text, images, drawings and organisers) was considered with the aim of achieving these goals. The most time was spent addressing graphs, reiterating some of the previous day's content and introducing a couple of graph types more specifically designed for the dashboard context: bullet graphs (see image below) and sparklines. Bullet graphs are Stephen Few's own development to address the requirement (unfortunately) usually fulfilled using gauges, i.e. the display of a single quantitative value against one or more comparative measures within the context of some qualitative ranges, e.g. poor, satisfactory, good. The benefits that bullet graphs have over gauges are numerous, but in particular they take up significantly less space and are more conducive to the aforementioned objectives. Sparklines, on the other hand, were introduced by Tufte in his book Beautiful Evidence, and are designed to present trends or variations in a simple, space-saving way.
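To make the two graph types a little more concrete, here is a minimal text-only sketch in Python. It is my own illustration rather than anything shown in the workshop: the function names, the block characters and the choice of darkest shade for the lowest range are all assumptions, and a real bullet graph would use shades of a single hue rather than text glyphs. The point is only to show the moving parts: a bar for the featured value, a marker for the comparative measure, shaded qualitative ranges behind both, and a word-sized trend line.

```python
# Text-only sketches of a sparkline and a bullet graph.
# All names, glyphs and defaults here are illustrative assumptions,
# not taken from Few's or Tufte's specifications.

SPARK_TICKS = "▁▂▃▄▅▆▇█"   # eight block heights, lowest to highest
BAND_SHADES = "▓▒░"        # one shade per qualitative range, darkest first


def sparkline(values):
    """Return a word-sized trend line: one block character per value."""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # A flat series has no variation to show; draw it at the baseline.
        return SPARK_TICKS[0] * len(values)
    top = len(SPARK_TICKS) - 1
    return "".join(SPARK_TICKS[round((v - lo) / span * top)] for v in values)


def bullet_graph(value, target, ranges, width=40):
    """Return a one-line bullet graph.

    value  -- the featured measure, drawn as a solid bar from zero
    target -- the comparative measure, drawn as a '|' marker
    ranges -- ascending upper bounds of the qualitative ranges
              (e.g. poor, satisfactory, good); the last is the scale maximum
    """
    scale_max = ranges[-1]
    cells = []
    for i in range(width):
        # Shade each cell by the qualitative range its midpoint falls in.
        midpoint = (i + 0.5) * scale_max / width
        band = next(n for n, bound in enumerate(ranges) if midpoint <= bound)
        cells.append(BAND_SHADES[min(band, len(BAND_SHADES) - 1)])
    # Overlay the bar for the featured measure, clamped to the scale.
    bar_length = max(0, min(width, round(value * width / scale_max)))
    for i in range(bar_length):
        cells[i] = "█"
    # Overlay the target marker, clamped to the scale.
    marker = max(0, min(width - 1, int(target * width / scale_max)))
    cells[marker] = "|"
    return "".join(cells)


if __name__ == "__main__":
    print("Revenue", bullet_graph(60, 80, [50, 75, 100], width=20))
    print("Trend  ", sparkline([1, 2, 3, 4, 5, 6, 7, 8]))
```

Even in this crude form, each measure fits on a single line of text, which hints at why both graph types suit a crowded dashboard far better than a gauge does.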

After touching on the importance of the aesthetics of the design (it should look appealing without impeding the aforementioned visual design objectives), we moved to critiquing dashboard examples submitted by attendees. This proved to be a good exercise of the day's lessons both for the submitters and the class as a whole.

Now You See It

The final day, titled "Simple Visualisation Techniques for Quantitative Analysis", was quite different to the preceding days as it was focused on how to gain an understanding of and insights into data sets, rather than the communication of existing understanding. As a basis for the rest of the day's material, the power of sight compared to our other senses was introduced and used as the argument for why data visualisation is a vital tool for exploring and understanding data sets. This was followed by a brief history of data visualisation, from the first evidence of tabular arrangement of data in the 2nd Century, through William Playfair's invention of line charts, bar charts and pie charts, to the spread of home computers in the 1980s and beyond.

The traits of a skilled data analyst and characteristics of good data were considered before emphasising the necessity for a solid understanding of visual perception and cognition to be able to take advantage of those traits and characteristics through visual analysis. Consequently, some time was then spent introducing a number of core aspects of visual perception and cognition. As during the previous classes, it was highlighted that visual perception is selective, meaning that we must encode data in such a way that what is potentially interesting or meaningful pops out by contrasting with the norm. Pre-attentive visual attributes were again introduced as the means by which this can be achieved, with length, 2-dimensional position, width, size and colour intensity able to encode quantitative values and shape and colour hue ideal for indicating categorisation. The limitations of perception were highlighted, with particular emphasis on the issue of inattentional blindness (the fun examples caught most of us out). Finally, some attention was given to the meaningful characteristics of data: trends, patterns and outliers; how to highlight those characteristics; and which patterns and comparisons in data are meaningful, e.g. steep vs gradual and random vs repeating.

The final stretch of the class focused on techniques for performing meaningful data analysis by attempting to bring the characteristics and patterns introduced in the preceding material to the fore. First we considered the various types of useful analytical interactions, such as comparing, filtering, aggregating, drilling and zooming/panning. Specific analysis techniques were then introduced for each of 7 categories of data analysis: time series, ranking and part-to-whole, deviation, distribution, correlation, multivariate and geospatial. Stephen used a number of different analytical tools - Spotfire, Tableau and Panopticon - to demonstrate not only some of these techniques, but also the state of what he considers to be the best of the current crop of data analysis tools. This crossed over into discussion of what the ultimate data analysis tool would provide: the appropriate set of visualisations and aforementioned analytical interactions, with no distracting lag between seeing something, thinking about it and manipulating it.

Closing Thoughts

My overall reaction to the workshops is definitely positive. Stephen Few is an engaging presenter who is able to eloquently convey many practical techniques and principles for information visualisation. The workshops appropriately complement the corresponding books by presenting similar material in a different way, with the group exercises and interactivity of the classes more actively reinforcing the concepts.

The minor negative I would highlight about both his books and the workshops is that they appear to overly target the business intelligence domain. This in itself is not a problem, but I think it sells short some of the ideas and information in the material, as I believe they are potentially much further reaching. Many of the fundamental concepts he teaches are too frequently ignored or simply not appreciated by those producing even the most basic of data visualisations. Moreover, visualisation and visual perception are key to other areas such as user experience and human-computer interaction, so it is unsurprising that most of his lessons cross over to such areas.

For those who like the sound of this course, details of future events can be found here.