With non-relational database implementations (key-store, graph, etc.) entering the mainstream, the necessity has arisen to synchronise relational databases to their non-relational cousins. Furthermore, a non-relational data source may be fitted retrospectively to an existing RDBMS deployment to leverage the benefits of a non-relational schema with only minor 'integration disturbance' to the original code-base.

In this blog, I will outline an approach for adding a graph-based data store to an existing relational application through the use of a JQuery plugin, JSON-based MVC and JMS.

The existing application is a very basic content management system, to which I will be adding the ability for users to add comments (or yakkety yak!) to content.

In this way, the legacy 'entity' domain of the RDBMS is augmented by a 'comment' domain, held as a graph of nodes in a Neo4J Graph database.

The graph-based web-app, based on user comments, I have called 'Yakkety Yak' for obvious reasons.

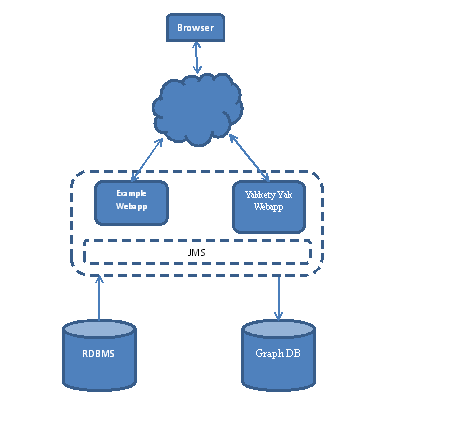

Lines, boxes and handwaving

The overall architecture of the solution is shown as a series of lines and boxes below. It is based around an application container in which two separate deployments are made - the first deployment is the vanilla database webapp. The second is the yakkety yak graph-based webapp.

The overall application has the following features:

- Orthogonality i.e. the two webapps must be able to exist independently of each other

- Synchronisation i.e. the two webapps must be able to communicate, despite their heterogeneous nature

Adding Comments to Content

As mentioned before, the existing application is a simple database-backed store of content that is rendered on a single page in the browser.

The back end is written in Java.

For example, the content entities are defined as sub-classes of a common base class as follows:

public class ExampleEntity extends AEntity {

private String content;

public String getContent() {

return content;

}

public void setContent(String content) {

this.content = content;

}

}The ID of each entity must be unique and is stored in the implementation of AEntity.

The entities are delivered using Spring MVC to the front-end (via a DAO, exampleEntityDAO):

@RequestMapping(value={"/", "*"}, method = RequestMethod.GET)

public ModelAndView getForm() {

final ModelAndView modelAndView = modelAndView("defaultView");

List entityList = exampleEntityDAO.getAll();

modelAndView.addObject(DEFAULT_MODEL_KEY, entityList);

return modelAndView;

}In order to allow users to comment on each item of content, we now require an 'Add Comment' link to be added by each item of content.

This can be achieved using a JQuery plugin that will detect the presence of a custom attribute i.e. data-contentKey

<td data-contentKey='$entity.id'>$entity.content</td>In this way, the unique id of the entity is 'embedded' in the HTML for future use.

The salient code from the JQuery plugin which creates the 'Add Comment' links is as follows:

function createLinks() {

$this.find('[' + dataContentKey + ']').each(function() {

$(this).append('<a href="#" class="' + addCommentLink + '"><img src="' + imageSrc + '" /></a>;');

});

};When rendered, this gives us the 'Add Comment' images as follows:

REST assured

So far so good. But how do we now enable comments to actually be added?

The answer to this is to expose our graph-database in a RESTful manner.

A concrete implementation of a REST Web service follows four basic design principles:

- Use HTTP methods explicitly.

- Be stateless.

- Expose directory structure-like URIs.

- Transfer XML, JavaScript Object Notation (JSON), or both.

So although our solution is not entirely stateless (since we have state held in the graph database), it will expose methods via HTTP and also allow use to view specific nodes using a well-known URL

For example to get per-content comments and make new comments, we expose the following methods in a JSON-enabled controller (i.e. servlet) in the graph domain:

@RequestMapping(value="getComments", method=RequestMethod.GET, headers = {"Accept=application/json"})

public @ResponseBody List getComments(@RequestParam(value = "contentKey", required = true) String contentKey) {

List commentList = commentDAO.getAllCommentsByContentKey(contentKey);

return commentList;

}

@RequestMapping(value="addComment", method = RequestMethod.POST, headers = {"Accept=application/json"})

public @ResponseBody void addComment(CommentFormModel commentFormModel) {

commentDAO.addUserCommentOnContent(commentFormModel.getUsername(), commentFormModel.getContentKey(), commentFormModel.getComment());

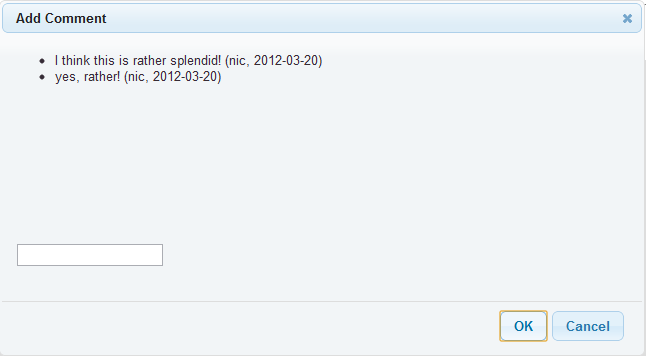

}These JSON-enabled methods are called from the dialog box that is part of our JQuery plugin (below).

When the comment is submitted, our new comment is stored in the graph-database.

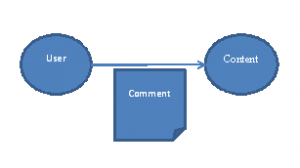

Under the covers, we have modelled the comment as a relationship that joins user nodes and content nodes, as follows:

Note that the relationship is uni-directional i.e. Users can make comments on Content but not vice versa

In terms of implementation, we can abstract away from the actual underlying graph store by using a DAO layer, for example:

public interface ICommentDAO extends IBaseDAO {

IComment getCommentById(String commentId) throws RelationshipNotFoundException;

void removeComment(String commentId) throws RelationshipNotFoundException;

void removeAllComments();

boolean hasComments(String contentKey);

void removeUserCommentsOnContent(String username, String contentKey) throws NodeNotFoundException;

List getAllCommentsByContentKey(String contentKey);

void addUserCommentOnContent(String username, String contentKey, String comment);

}Obviously, we are able to then expose this DAO via a web service or MVC presentation layer.

This approach is entirely orthogonal to the original RDBMS approach, since we can also use the ID of a content or comment node, to 'view' the node directly.

For example, the URL:

http://localhost:8080/yakketyyak/content/345345345would display the node with id 345345345 in the browser; in this case it displays the content node along with its concomitant comments.The important point to note is that this is outwith the existing RDBMS and we are displaying only data held in the graph database.

For example, we can display the comments using a JQuery JSON request:

Tell the world of the creation!

The next task is the non-trivial part - to fully synchronize the two data sources.

When content or users are added, we can implicitly add nodes, yet we would still need to be able to inform the (slave) data source of deletion events so that nodes (and their relationships) can be removed.

Integration can be achieved using JMS by generating messages whenever an entity is created or removed.

In the DAO Layer of the RDBMS domain we can inject a JMS message producer as follows:

public class AEntityDAO {

private IJMSProducer jmsProducer;

public AEntityDAO(IJMSProducer jmsProducer) {

this.jmsProducer = jmsProducer;

}

public IEntity addEntity(IEntity entity) {

// tell the world of the creation

getJmsProducer().sendEntityCreationMessage(entity.getClass().getCanonicalName(), entity.getId().toString(), entity.toMap());

return entity;

}

public IJMSProducer getJmsProducer() {

return jmsProducer;

}

}This ensures that any addEntity(...) event will generate a message on the JMS Queue, which can be subsequently consumed by a 'listener' in the graph-database domain.

The actual queue itself is configured within JBoss 7.1.x since it ships with the redoubtable HornetQ. A second remote queue is also configured since JBoss will allow a remote JNDI connection to a remotely-configured queue, which is ideal for testing.This set-up is configured in the standalone.xml file of JBoss as follows:

<jms-destinations>

<jms-queue name="testRemoteQueue">

<entry name="java:jboss/exported/jms/queue/test"/>

</jms-queue>

<jms-queue name="testLocalQueue">

<entry name="jms/queue/test"/>

</jms-queue>

</jms-destinations>Note - the initial context factory setting as used in the client code will be different for remote and local queue access. The remote setting is 'org.jboss.naming.remote.client.InitialContextFactory' whereas the local setting is 'org.jboss.as.naming.InitialContextFactory'. There is more on this topic in the references below or email me for more info.

Finally, we need to consume the JMS messages and act upon them. The JMSConsumer itself is beyond the scope of this blog, suffice to say it has the following API:

public interface IJMSConsumer {

IEntityActionMessage receiveMessage();

void resetConnection();

void close();

void setTimeout(long millis);

}Where the IEntityActionMessage is the interface for all messages being passed.

Crucially, it has a getContentKey() so that we can pass the unique ID of the entity that was created or deleted.

But for a given entity in the RDBMS domain (e.g. com.entity.ExampleEntity), how do we know how to map this to a DAO in the graph domain?

Mapping entities to nodes

The solution can be found in a callback mechanism for message handling.

The DAO layer in the graph domain is augmented with a new interface - IEntityToNodeMappingHandler - which handles the incoming messages from the JMS Consumer.

public interface IEntityToNodeMappingHandler {

String getMappedEntityFullyQualifiedClassName();

void handleMessage(IEntityActionMessage message);

}For example the content DAO implements the interface as follows:

/*

* Call-back method in IEntityToNodeMappingHandler

*/

public void handleMessage(IEntityActionMessage message) {

String contentKey = message.getContentKey();

String content = message.getEntityPropertyMap().get("content");

if (message instanceof CreateEntityMessage) {

addContent(contentKey, content);

} else if (message instanceof DeleteEntityMessage) {

try {

removeContent(contentKey);

} catch (NodeNotFoundException e) {

log.error("An error occurred.", e);

}

}

}

...

public String getMappedEntityFullyQualifiedClassName() {

return "com.example.entity.ExampleEntity";

}This new interface gives us a handle on the incoming IEntityActionMessage messages and also states explicitly which entity will be handled by the DAO.

The Mapping Dispatcher

When a DAO in the graph domain is autowired by Spring, a mapping dispatcher is also passed in as a constructor parameter. It is this code which performs the actual mapping from entity class to DAO.

For example, the Content DAO will register itself to handle all messages that originate from a specific class of entity, as follows:

mappingDispatcher.registerEntityToNodeMappingHandler(this);

All that remains is for us to specify the entity class in the relational domain when the JMS call originates.

So in the RDBMS domain, we generate messages as follows:

getJmsProducer().sendEntityCreationMessage(entity.getClass().getCanonicalName(), entity.getId().toString(), entity.toMap());

Here we can see that the first argument is the class of the entity, which then maps onto our content DAO. The second parameter is the entity id and the third is a map of entity properties i.e. the entity as a set of key/value pairs. Obviously this scheme only allows for string properties, but the scheme could easily be extended.

One obvious extension is to store binary data (i.e. an image) on a per-content basis, but this would probably require a slightly different back-end (maybe a scaleable solution like Apache Cassandra), but this may potentially be the subject of a further blog.

So we have now seen how we are able to act upon both creation and deletion events and keep the data sources in step.

Note - this is currently implemented as a one way master-slave relationship but the approach could be adapted to allow two-way communication.

Final thoughts

Graph databases are ideal for data that is a natural tree of nodes connected with relationships; both nodes and relationships may hold data.

In this example we have used the relatively trivial example of comments made by users on content, but the approach is valid for more complex data structures. For example, more complex trees with complicated traversal patterns. One good example is where we need to perform top-down or bottom-up graph traversals, for example, optimization problems such as financial portfolio optimization.

However, there are some obvious further extensions to this more simple example:

- Allow the storage of binary data when creating content nodes

- Add access restrictions through some kind of ACL or user-roles

- Extend the graph schema into a specific application domain by adding more specific node and relationship types

Credit and references

- Special thanks to Ben Mumford for development of the JQuery plugin

- JBoss 7.1.x

- Remote JBoss Client Using JNDI

- Neo4J graph database

- Gremlin Graph Traversal

- For a zip of the source code, please contact nhemley@scottlogic.co.uk