In the last post, I talked about, and hopefully dispelled, some of the myths about a career in testing. Here, I’ll go into a bit more depth about current and past projects I've worked on, hopefully giving some insight into how testers work at Scott Logic.

Since starting my current role in 2013, I’ve been involved in a few projects. On the first, I joined midway through the application's development, but even joining late, I was able to shape future ways of working and promote a better approach to testing. I also learnt a lot, using in depth technologies that I had only briefly used before. I've been able to take that knowledge and experience to the project I'm currently working on, where I became involved much earlier.

So what about some of those myths?

What do testers do, if it's not writing and executing scripts?

At Scott Logic, our testers are part of the development team, and this is the case on my current project: I'm sat in the beating heart of the project team. We work with clients worldwide, although remotely from our UK-based offices. This makes communication a very important part of day-to-day work, from the morning scrum call to conversations throughout the day with local and remote developers and business owners.

My current project follows a loose form of Scrum, with the aim of producing working software rather than documentation, so I test within each sprint: as each feature or fix is finished, I can test it. By test, I mean "...the process of evaluating a product by learning about it through exploration and experimentation, which includes to some degree: questioning, study, modeling, observation, inference, etc."

Each test tells me something about the current state of the software being developed, and I use that information to generate new ideas and tests. I use various heuristics to uncover possible problems, and I report those problems either by raising bugs or by talking to the right person, which goes to the heart of one of the values of the Agile Manifesto:

“Individuals and interactions over processes and tools”

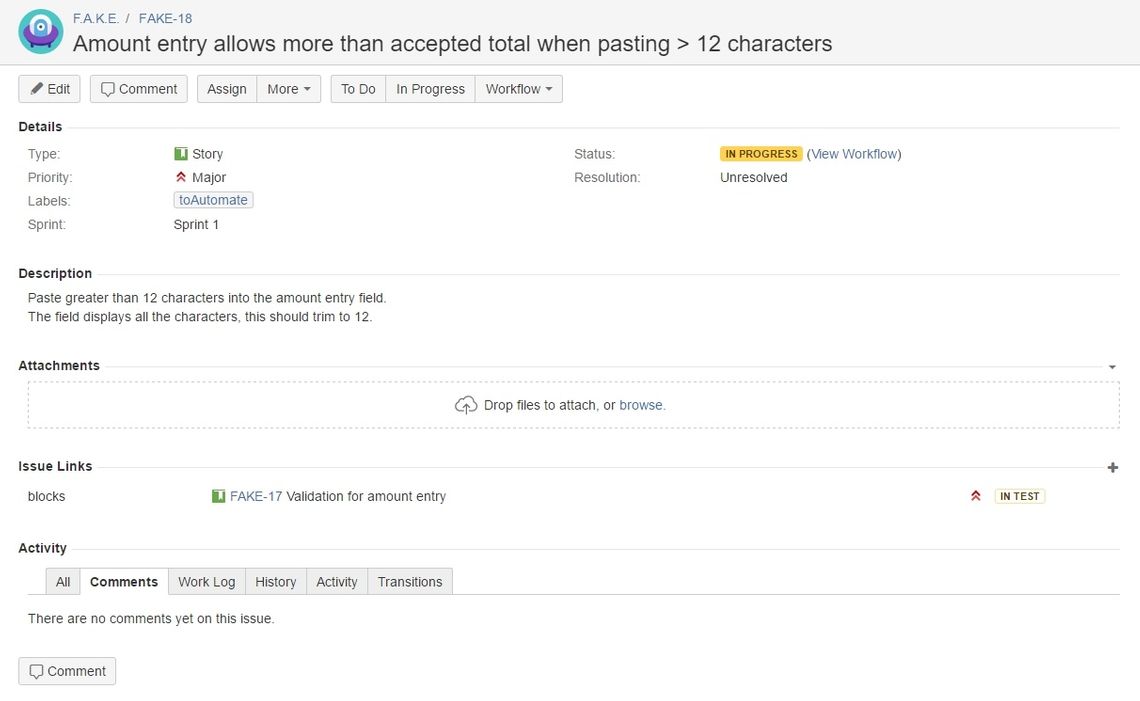

Of course, we do use processes and tools. It's not a chaotic jumble of random stuff. We have a flow of developed features and bugs tracked on a JIRA board.

The board gives a view of the current sprint, which lets me, as a tester, plan and move quickly. We use a branching strategy that lets me test new features and fixes either in isolation or all together in a test branch. This test branch contains all the changes that have been merged into the development branch, but it is only built when I'm ready to continue testing. This way, I get a stable environment to test against, rather than one that changes without my knowledge every time a developer pushes to the development branch.

Where do I start?

Generally, I'll look at bug fixes first. They are often quicker to validate and, in most cases, don't have as great an impact as new features, so fewer problems are likely to be found. Of course, that isn't always the case, particularly if a bug fix fundamentally changes the behaviour of some functionality, or the code change is complex and wide-ranging. In those cases, a quick chat with the developer may be needed. However, because I work within the sprint and sit in the project team, I’ve been able to encourage developers to note on each bug what impact their change is likely to have, which makes both my work and theirs easier.

Delving a little deeper

For new features, a more comprehensive look is needed. I often start with the acceptance criteria on the feature. Then, depending on what the new feature is, I start exploring with heuristics such as SFDPOT, blink test, steeplechase, galumphing, creep and leap, tours or pair testing. I use my experience and knowledge of the application, and in some cases my lack of knowledge(!), to spot patterns and check things I know have failed previously with similar features. For this, I keep a JIRA query of 'high risk failures': bugs and features that I may want to keep re-checking regularly. I also use JIRA to mark features and bugs that I want to write automated checks for.

As we are using the Angular framework, this end-to-end automation is done with Protractor. And this is where I do write scripts, in JavaScript using Jasmine notation, or, more accurately, specs. Since starting on the project, I have worked on this intermittently but progressively, from the small checks that ran initially to the fully laid-out framework of config files, page objects, helpers, data and specs that we have now.

Since my first project, we have hired more great testers and we work with the same ethos:

"Creatively and with context".

The work certainly isn't boring; it can be technically challenging, and as for respect, well, ask any of our developers, or the clients we’ve provided testers for.