Apache Spark has now reached version 3.5.1, but what if you are still using a 2.4.x version? Version 2.4.8 went out of support in May 2021, so upgrading is strongly advised.

If you go through the pain of updating to the latest version, what do you gain?

- Apache Spark SQL has evolved considerably: it now supports ANSI SQL and has gained many new features and performance improvements.

- A great deal of new functionality has been added in the Python and PySpark areas. In particular, Pandas API on Spark gives you a tuned distributed version of pandas in the Spark environment.

- Streaming has gained a number of functional and performance enhancements.

- The addition of support for NumPy and PyTorch aids machine learning tasks and the distributed training of deep learning models.

Improvements to Apache Spark SQL

Apache Spark’s SQL functionality is a major part of the platform, being one of the two main ways of manipulating data. The 3.x releases have seen a major shift to support ANSI SQL, with even Spark-specific SQL being aligned as closely as possible with ANSI standards.

Performance has been enhanced with a new Adaptive Query Execution (AQE) framework. Features include better execution plans, with re-optimization based on runtime statistics, and optimization of query planning. There are better adaptive optimizations of shuffle partitions, join strategies and skew joins. Dynamic partition pruning removes unused partitions from joins, reducing the volume of data. Finally, Parquet complex types such as lists, maps, and arrays are now supported.
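As a rough illustration, the adaptive features described above are controlled by ordinary SQL configuration keys. The sketch below collects a few of them and applies them when building a session. The values simply make the Spark 3.x behaviour explicit (AQE has been on by default since 3.2); the helper name `build_session` and the application name are made up for this example.

```python
# Configuration keys for the adaptive features described above.
AQE_SETTINGS = {
    "spark.sql.adaptive.enabled": "true",                    # turn AQE on
    "spark.sql.adaptive.coalescePartitions.enabled": "true",  # merge small shuffle partitions
    "spark.sql.adaptive.skewJoin.enabled": "true",            # split skewed join partitions
    "spark.sql.optimizer.dynamicPartitionPruning.enabled": "true",
}


def build_session(app_name="aqe-demo"):
    """Create (or reuse) a SparkSession with the settings above applied."""
    # Imported lazily so the settings dict can be inspected without Spark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in AQE_SETTINGS.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

On a Spark 3.x installation, `build_session().conf.get("spark.sql.adaptive.enabled")` is a quick way to confirm that a setting took effect.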

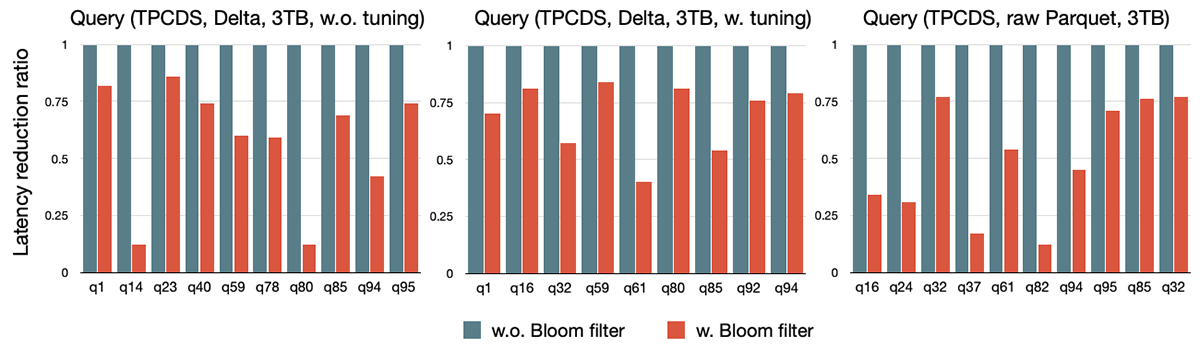

Various optimizations push filtering of data out to data sources or earlier in the pipeline, reducing the amount of data scanned and processed in Apache Spark. For instance, injecting Bloom filters and pushing them down the query plan gave a claimed ~10x speedup on a TPC-DS benchmark for untuned Delta Lake sources and Parquet files.

(source)


There are a large number of improvements to support developers and increase functionality. SQL plans are presented in a simpler, structured format, aiding interpretability. Error handling has been improved, with runtime errors returned instead of NULLs, and with explicit error classes indicating the type and location of the error. In addition, errors now contain industry-standard SQLSTATE error codes.
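To make the error-handling changes concrete, here is a minimal sketch that assumes a Spark 3.5 session with ANSI mode switched on. The function names are invented for this example. `getErrorClass()` and `getSqlState()` are the accessors PySpark 3.5 exposes on its exceptions.

```python
def summarize_spark_error(exc):
    """Return the error class and SQLSTATE carried by a PySpark exception."""
    return {"error_class": exc.getErrorClass(), "sqlstate": exc.getSqlState()}


def divide_by_zero_report(spark):
    """Run a failing query in ANSI mode and report how Spark classified it."""
    from pyspark.errors import PySparkException  # pyspark.errors exists from 3.4

    # With ANSI mode on, invalid operations raise errors instead of yielding NULL.
    spark.conf.set("spark.sql.ansi.enabled", "true")
    try:
        spark.sql("SELECT 1 / 0 AS q").collect()
    except PySparkException as exc:
        return summarize_spark_error(exc)
    return None  # no error was raised (for example, ANSI mode was not honoured)
```

With ANSI mode enabled, one would expect the report to name a division-by-zero error class together with its SQLSTATE, rather than the query silently returning NULL.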

Developers are aided by better compile-time checks on type casting. Parameterized SQL queries make queries more reusable and more secure. New operations such as UNPIVOT and OFFSET have been added, along with new linear regression and statistical functions. In addition, FROM clauses can now use Table-valued Functions (TVF) and User-Defined Functions (UDF), enhancing the capabilities of SQL syntax. Over 150 SQL functions have been added to the Scala, Python and R APIs, removing the need to specify them using error-prone string literals.
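The following sketch shows what parameterized queries, UNPIVOT and OFFSET can look like from PySpark. It assumes Spark 3.5, where `spark.sql` accepts plain Python values in `args`. The table contents, column names and function names are invented for illustration.

```python
# A named parameter marker (:min_id) keeps the value out of the SQL text itself.
QUERY = "SELECT id FROM range(10) WHERE id > :min_id ORDER BY id"


def ids_above(spark, min_id):
    """Run the parameterized query and return the matching ids as a list."""
    rows = spark.sql(QUERY, args={"min_id": min_id}).collect()
    return [row["id"] for row in rows]


def quarterly_sales_long(spark):
    """Turn one column per quarter into one row per (product, quarter)."""
    wide = spark.createDataFrame(
        [("widget", 10, 12), ("gadget", 7, 9)],
        ["product", "q1", "q2"],
    )
    # UNPIVOT: keep `product`, fold q1/q2 into (quarter, sales) pairs.
    long = wide.unpivot("product", ["q1", "q2"], "quarter", "sales")
    # OFFSET skips leading rows; sort first so the skipped row is well defined.
    return long.orderBy("product", "quarter").offset(1)
```

Because the value travels separately from the query text, the same `QUERY` string can be reused with different thresholds without string concatenation.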

Enhancements to Python and PySpark functionality

Python and PySpark have been a major focus of Apache Spark development. In V3.1, Project Zen began an ongoing process of making PySpark more usable, more Pythonic, and more interoperable with other libraries. The developer experience has been enhanced with better type hints and autocompletion, improved error handling and error messages, additional dependency management options (with Conda, virtualenv, and PEX supported) and better documentation.

Possibly the most significant change is with the Pandas API on Spark, added to Spark V3.2. This is a distributed re-implementation of the pandas data analysis library, allowing the workload to be delegated across multiple nodes instead of being restricted to a single machine. This provides improved performance, with execution speed scaling nearly linearly with cluster size. Each new version has brought further optimizations.

As the 3.x versions have progressed, the Pandas API on Spark has implemented more and more of the full pandas API. If required, a Pandas API on Spark DataFrame can be converted to a pandas DataFrame, at the cost of being restricted to a single machine’s processing power and memory. One difference from pandas is the use of Plotly for interactive data visualization, instead of static visualization with Matplotlib.
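As a small sketch of what this looks like in practice, the code below runs the same pandas-style aggregation on a pandas-on-Spark DataFrame and then converts the result back to pandas. The sample data and function names are invented for this example, and it assumes Spark 3.2 or later with pandas and PyArrow installed.

```python
# Illustrative sample data; any column-oriented dict would do.
SALES = {
    "category": ["books", "games", "books", "games", "music"],
    "amount": [12.0, 30.0, 8.0, 5.0, 20.0],
}


def total_by_category(frame):
    """Sum `amount` per category; written once, usable on pandas or pandas-on-Spark."""
    return frame.groupby("category")["amount"].sum().sort_index()


def run_on_spark():
    """Run the pandas-style code on a distributed DataFrame."""
    import pyspark.pandas as ps  # the Pandas API on Spark

    psdf = ps.DataFrame(SALES)        # distributed across the cluster
    totals = total_by_category(psdf)  # evaluated by Spark
    return totals.to_pandas()         # collected onto a single machine
```

The final `to_pandas()` call is the conversion mentioned above: convenient for small results, but it pulls everything onto the driver.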

New DataFrame equality test functions, with detailed error messages indicating diffs, help with ensuring code quality. A profiler for Python and pandas User Defined Functions helps with fixing performance and memory issues.
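For example, a unit test might compare a transformation's output with an expected DataFrame as sketched below. This assumes Spark 3.5, where `assertDataFrameEqual` lives in `pyspark.testing`. The rows and the function name are made up.

```python
ACTUAL_ROWS = [(1, "a"), (2, "b")]
EXPECTED_ROWS = [(2, "b"), (1, "a")]  # same rows, different order


def check_frames_match(spark):
    """Raise an assertion error with a row-level diff if the frames differ."""
    from pyspark.testing import assertDataFrameEqual

    actual = spark.createDataFrame(ACTUAL_ROWS, ["id", "label"])
    expected = spark.createDataFrame(EXPECTED_ROWS, ["id", "label"])
    # Row order is ignored unless checkRowOrder=True is passed.
    assertDataFrameEqual(actual, expected)
```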

NumPy support brings powerful and efficient array functionality to Apache Spark, something of particular use to ML users.

Streaming advances

Streaming functionality has been enhanced, with stream-stream joins being filled out with full outer and left semi joins, and with new streaming table APIs. RocksDB can now be used for state stores, giving better scalability as state size is no longer limited to the heap size of the executors. In addition, fine-grained memory management enables a cap on total memory usage across RocksDB instances in an executor process.
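A minimal configuration sketch for the RocksDB state store follows. The helper names and the 500 MB figure are illustrative assumptions, and the two memory-related keys assume Spark 3.5. The settings should be in place before the streaming query is started.

```python
ROCKSDB_PROVIDER = (
    "org.apache.spark.sql.execution.streaming.state.RocksDBStateStoreProvider"
)


def rocksdb_state_store_conf(max_memory_mb=500):
    """Settings that select RocksDB for state and cap its memory per executor."""
    return {
        "spark.sql.streaming.stateStore.providerClass": ROCKSDB_PROVIDER,
        "spark.sql.streaming.stateStore.rocksdb.boundedMemoryUsage": "true",
        "spark.sql.streaming.stateStore.rocksdb.maxMemoryUsageMB": str(max_memory_mb),
    }


def enable_rocksdb(spark, max_memory_mb=500):
    """Apply the settings to an existing session."""
    for key, value in rocksdb_state_store_conf(max_memory_mb).items():
        spark.conf.set(key, value)
```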

Stateful operations (aggregation, deduplication, stream-stream joins) can now be used multiple times in the same query, including chained time window aggregations. This removes the need to create multiple queries with intermediate storage, reducing cost and increasing performance. In addition, stateful processing functions can now be defined using Python, not just Java or Scala.
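A chained time window aggregation can be sketched as follows, assuming Spark 3.4 or later (where `window_time` is available). It uses the built-in `rate` test source; the window sizes and the function name are arbitrary choices for this example.

```python
def chained_window_counts(spark):
    """Build a streaming DataFrame with two aggregations in a single query."""
    from pyspark.sql import functions as F

    events = (
        spark.readStream.format("rate")  # test source emitting (timestamp, value)
        .option("rowsPerSecond", 10)
        .load()
        .withWatermark("timestamp", "10 seconds")
    )
    # First stateful operator: number of events per 10-second window.
    per_10s = events.groupBy(F.window("timestamp", "10 seconds")).count()
    # Second stateful operator: roll the 10-second counts up into 1-minute windows.
    # window_time() extracts an event-time column from the first window.
    per_minute = per_10s.groupBy(
        F.window(F.window_time("window"), "1 minute")
    ).agg(F.sum("count").alias("events"))
    return per_minute
```

To run it, the result would be written with `writeStream` in append output mode, for instance to the console sink.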

Various enhancements give improved performance, including native Protobuf support.

Machine learning and AI gain support

It is no surprise that Apache Spark has increased its support for machine learning and AI, both because of the current buzz and because they are a natural fit for Spark’s functionality.

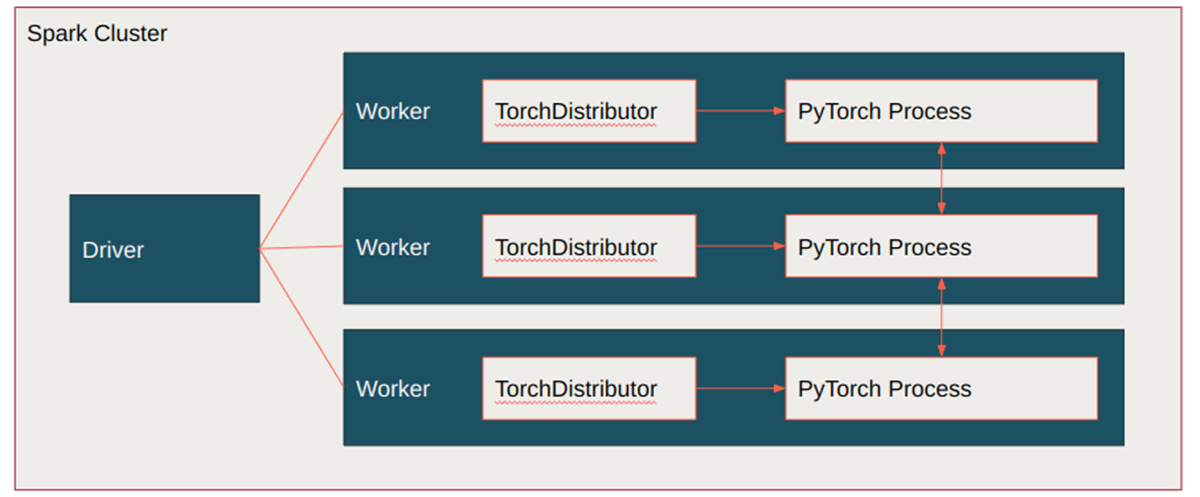

TorchDistributor provides native support in PySpark for PyTorch, which enables distributed training of deep learning models on Spark clusters. It initiates the PyTorch processes and delegates the management of distribution mechanisms to PyTorch, overseeing the coordination of these processes.

(source)


TorchDistributor is simple to use, with a few main parameters to consider:

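    from pyspark.ml.torch.distributor import TorchDistributor

    model = TorchDistributor(
        num_processes=2,   # number of PyTorch processes to launch
        local_mode=True,   # run on the driver node rather than on the executors
        use_gpu=True,      # train on GPUs instead of CPUs
    ).run(<function_or_script>, <args>)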

Keeping up with the trend for LLM-supported coding, the English SDK for Apache Spark takes English instructions and compiles them into PySpark objects such as DataFrames. Generative AI transforms the English instructions into PySpark code.

For example:

best_selling_df = df.ai.transform("What are the best-selling and the second best-selling products in every category?")

Spark Connect

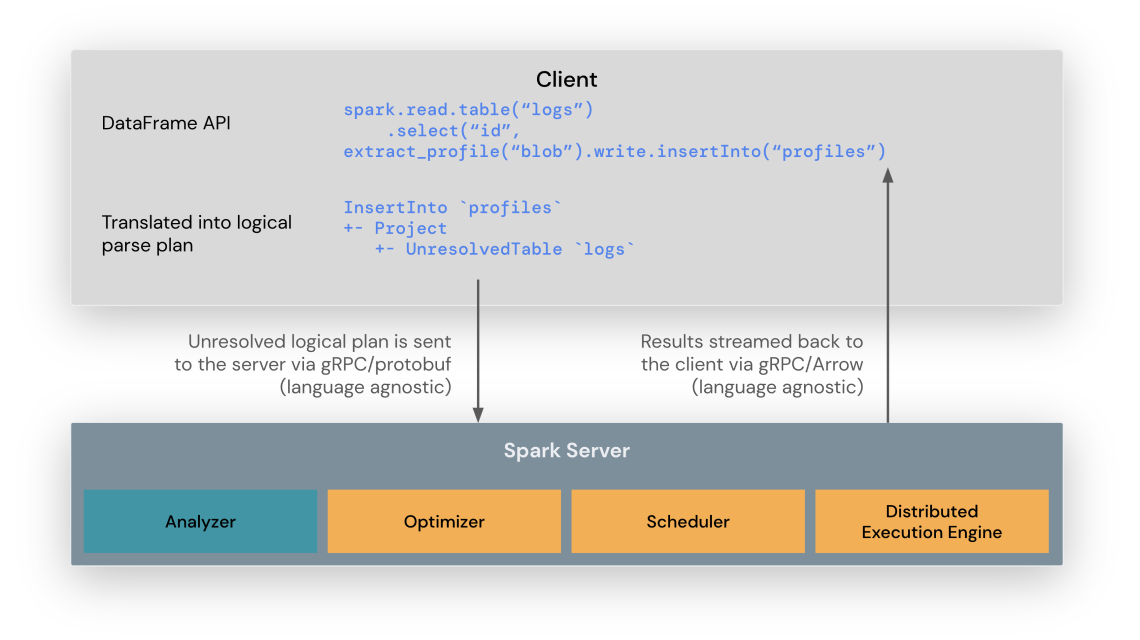

Spark Connect, introduced in v3.4.0, is a protocol designed to streamline communication with Spark Drivers.

The Spark Connect client library translates DataFrame operations into unresolved logical query plans which are encoded using protocol buffers. These are sent to the server using the gRPC framework.

Here’s how Spark Connect works at a high level (from the official documentation):

- A connection is established between the Client and Spark Server

- The Client converts a DataFrame query to an unresolved logical plan that describes the intent of the operation rather than how it should be executed

- The unresolved logical plan is encoded and sent to the Spark Server

- The Spark Server optimises and executes the query

- The Spark Server sends the results back to the Client
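From the client's point of view, the steps above can be sketched as follows. This assumes a Spark Connect server is already running and that PySpark was installed with its Connect dependencies. The host name and function names are placeholders; 15002 is the default Spark Connect port.

```python
def connect_url(host="localhost", port=15002):
    """Build a Spark Connect connection string."""
    return f"sc://{host}:{port}"


def remote_even_count(url):
    """Connect to a Spark Connect server and run a small DataFrame query."""
    from pyspark.sql import SparkSession

    # Step 1: establish the connection between the client and the Spark server.
    spark = SparkSession.builder.remote(url).getOrCreate()
    # Step 2: this only describes the query; the client holds an unresolved plan.
    evens = spark.range(100).filter("id % 2 = 0")
    # Steps 3 to 5: the plan is sent over gRPC, executed, and the result returned.
    return evens.count()
```

The DataFrame code is the same as it would be against a local session; only the way the session is created differs.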

(source)


Other areas of improvement

The Apache Spark operations experience has been upgraded through new UIs, for instance for Structured Streaming, more observable metrics, aggregate statistics for streaming query jobs, detailed statistics about streaming queries, and more.

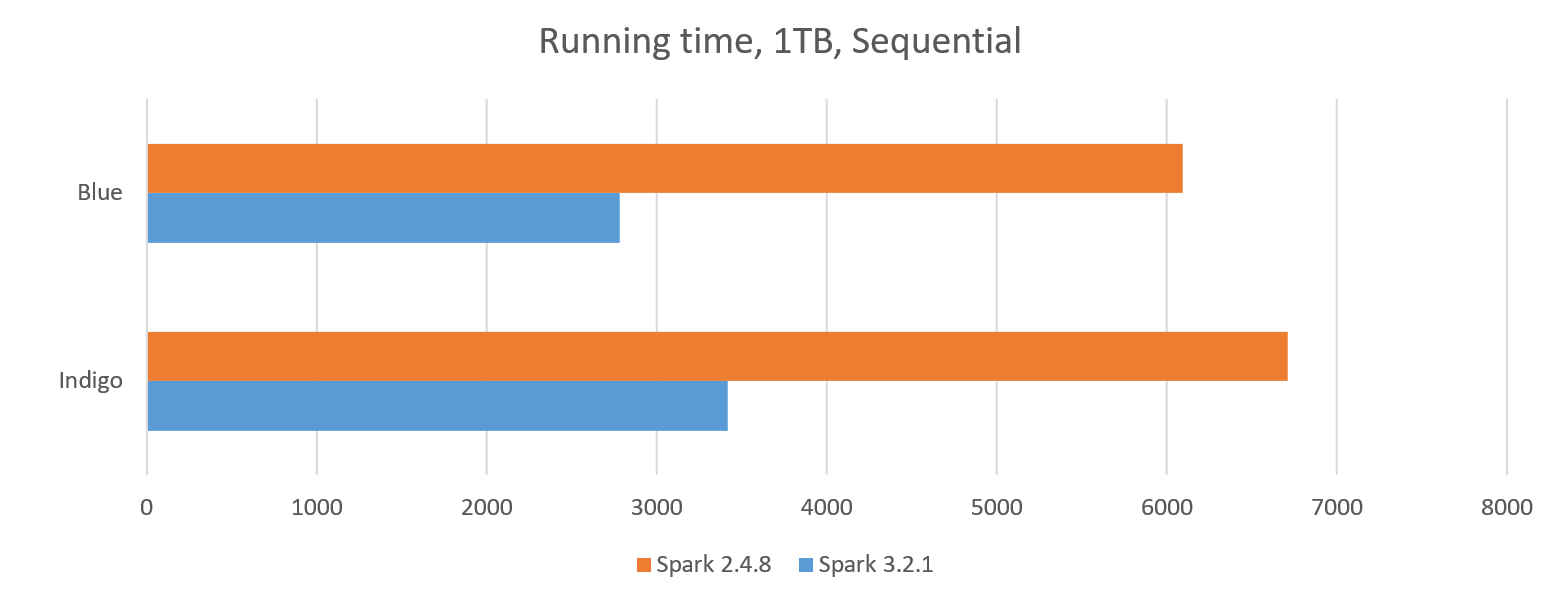

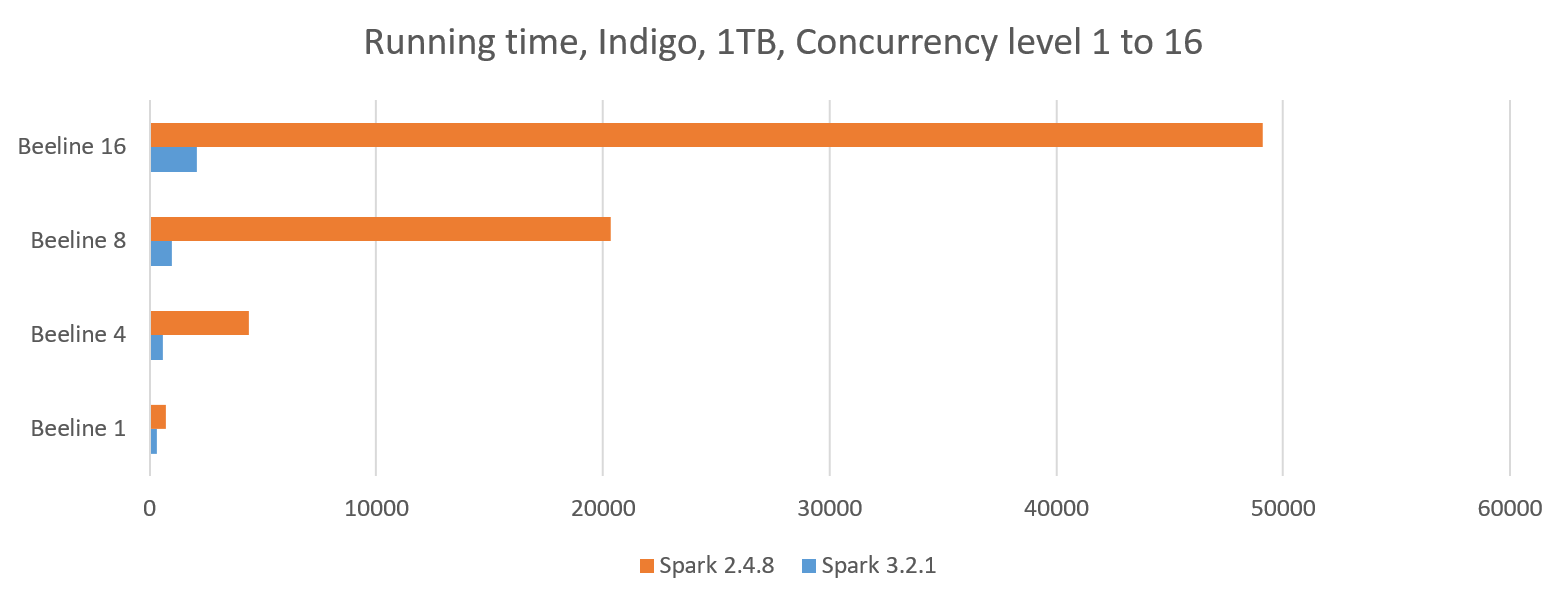

We did not find any benchmarks directly comparing performance between Apache Spark 2.4.x and 3.5.x. However, we did find this post by DataMonad running a TPC-DS benchmark, which shows significant speedups going from Spark 2.4.8 to 3.2.1, particularly when running concurrent queries.

In a sequential test, Spark 3.2.1 performed about twice as fast as Spark 2.4.8.

(source)


For a parallel test, Spark 3.2.1 ran up to 16 times faster than Spark 2.4.8.

(source)


Cloud Platform Support

If you are running your own Apache Spark cluster, either on-premises or in a cloud-hosted VM, then you can choose whatever version is available to you. However, if you want to run in a more Spark-as-a-Service mode, what do the major cloud providers offer you?

Amazon claims that their EMR runtime for Apache Spark is up to three times faster than clusters not using EMR. However, if you choose EMR, you get the single version of Apache Spark supported in that environment, which is currently 3.5.0.

Microsoft Azure HDInsight is more trailing edge than leading edge. HDInsight V4.0 provides Apache Spark V2.4, whose basic support ended in February of 2024. HDInsight V5.0 supports Apache Spark V3.1.3, which was released in February of 2022. Meanwhile, HDInsight V5.1 supports the more recent Apache Spark V3.3.1, released in October of 2022.

Google offers GCP Dataproc, with serverless Spark support. The oldest supported Apache Spark runtime is V3.3.2, with the default being V3.3.4. The latest V3.5.1 is also offered.

An alternative, though possibly more expensive, route is to use Databricks’ offerings on the main cloud providers. These give you a choice of the most recent versions of Apache Spark.

Deprecations

Here are the library versions and runtime changes that have occurred since Spark v2.4.6.

| Version | Library versions and runtime changes |
| --- | --- |
| 3.0.0 | |
| 3.1.1 | |
| 3.2.0 | |
| 3.5.0 | Upcoming removals: the following features will be removed in the next Spark major release |

Resolved Issues

Here is the approximate number of tickets resolved in each feature release since Spark v2.4.6.

| Release | Tickets resolved |
| --- | --- |
| 3.0.0 | 3,400 |
| 3.1.1 | 1,500 |
| 3.2.0 | 1,700 |
| 3.3.0 | 1,600 |
| 3.4.0 | 2,600 |
| 3.5.0 | 1,300 |

To Conclude

Migrating software to newer versions is always daunting, but moving on from out-of-support versions is crucial.

As seen above, there are relatively few deprecations. A migration guide is provided for Apache Spark and includes a number of configuration switches to retain legacy behaviours, easing the migration process.
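As an illustration of such switches, the sketch below sets two legacy options that are described in the migration guide. Which switches a given workload actually needs is an assumption to verify against the guide, and the helper name is invented for this example.

```python
LEGACY_SWITCHES = {
    # Parse and format dates and timestamps the way Spark 2.4 did.
    "spark.sql.legacy.timeParserPolicy": "LEGACY",
    # Allow the looser Spark 2.4 type coercion when inserting into tables.
    "spark.sql.storeAssignmentPolicy": "LEGACY",
}


def apply_legacy_switches(spark, switches=LEGACY_SWITCHES):
    """Set each switch on the session and return the values now in effect."""
    for key, value in switches.items():
        spark.conf.set(key, value)
    return {key: spark.conf.get(key) for key in switches}
```

Switches like these are best treated as a temporary bridge, to be removed once the affected jobs have been adapted to the new behaviour.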

In return for the pain, you gain access to a great deal of new functionality, in all areas, and significant performance improvements.

More Information

The latest Apache Spark documentation can be found here.

The documentation for the last 2.x version (2.4.8) is here.

Release notes can be accessed from the Apache Spark News index.

The Apache Spark migration guide is provided here.

Databricks has useful summaries of each Apache Spark 3.x version:

- Apache Spark 3.0

- Apache Spark 3.1

- Apache Spark 3.2

- Apache Spark 3.3

- Apache Spark 3.4

- Apache Spark 3.5