Testing an Event Ingestion Service: A Comprehensive Approach

Introduction

Event-driven architectures are the backbone of scalable, responsive distributed systems. Central to such systems is an ingestion service that receives, processes, and routes event messages. In this blog post, we’ll dive into how we tested an ingestion service integrated with a cloud-based event hub, covering our strategies across multiple environments, including Development (DEV), Test (TEST), and Integration (INT). This post details our testing approach, tools, environments, and the ingestion process flow, complete with a diagram.

Overview of the Ingestion Service

The ingestion service serves as a gateway for event messages, accepting data from external producers via a cloud-based event hub, and routing it to downstream systems. We used a managed cloud event hub service for its scalability and reliability, connecting to it via the cloud provider’s SDK to send messages programmatically. The service was designed to:

- Receive event messages in formats like JSON.

- Validate messages for schema compliance and data integrity.

- Route valid messages to the service for downstream processing.

Test Environments

To ensure the ingestion service performed reliably across different scenarios, we conducted testing in three distinct environments:

- Development (DEV) Environment: Used for early-stage testing and development. The upstream system was not connected to the event hub, allowing isolated testing without external interference.

- Test (TEST) Environment: A controlled environment for automated testing, also disconnected from the upstream system to avoid disruptions during test execution.

- Integration (INT) Environment: A near-production setup where the upstream system was fully connected to the event hub, enabling end-to-end (E2E) testing with real-world data flows.

Each environment played a critical role in validating the service’s functionality, performance, and reliability.

Testing Strategy

Our testing strategy combined automated and manual testing to cover functional, performance, reliability, and integration aspects. Below, we outline the approach for each.

1. Functional Testing

Functional tests verified the core capabilities of the ingestion service:

- Message Reception: Ensured the service could handle expected message formats, such as JSON payloads with defined schemas.

- Validation: Confirmed proper validation of message schemas, rejecting invalid or malformed data (e.g., incorrect data types or missing required fields).

- Routing: Validated that messages were correctly forwarded to the downstream service without data loss or corruption.

Automated Testing (DEV and TEST): In DEV and TEST environments, we used the cloud provider’s SDK to send programmatically generated messages to their respective event hubs, including valid payloads, malformed data, and edge cases (e.g., oversized messages or missing fields). Automated scripts validated error handling, schema compliance, and routing logic, ensuring the service behaved as expected under controlled conditions.

Manual Testing (INT): In the INT environment, we relied on the upstream system’s message generation mechanism to send messages to the event hub, simulating production-like data flows. This approach tested schema compatibility with real-world data from the upstream system, revealing schema mismatches such as missing fields or incorrect data types. These issues were resolved by updating the service’s validation logic to align with the upstream system’s message structure.

2. Performance Testing

Performance testing ensured the service could handle high message volumes efficiently.

Automated Testing (DEV and TEST):

- Load Testing: Sent thousands of messages per second using the SDK to measure throughput and latency.

- Stress Testing: Pushed the service beyond expected capacity to identify bottlenecks.

- Scalability Testing: Added service instances to verify horizontal scaling.

Manual Testing (INT): In the INT environment, we sent large batches of messages to simulate peak loads, measuring processing speed and queue backlog. These tests confirmed the service’s ability to handle real-world traffic from the upstream system.

3. Reliability Testing

Reliability tests validated the service’s resilience under failure conditions.

Automated Testing (DEV and TEST):

- Fault Tolerance: Simulated network failures and service restarts to test recovery mechanisms.

- Idempotency: Sent duplicate messages to ensure they were handled without causing downstream issues.

- Data Integrity: Verified no messages were lost or corrupted during processing.

Manual Testing (INT): In the INT environment, we tested recoverability by sending messages while the event hub was temporarily down. Once the hub was restored, we confirmed the ingestion service resumed processing from the last checkpoint, ensuring no data loss.

4. Integration Testing

Integration testing focused on E2E validation in the INT environment, where the upstream system was connected to the event hub. Key tests included:

- Schema Validation: Verified that message schemas from the upstream system matched the ingestion service’s expectations. We identified and fixed issues like mismatched field names and data types.

- E2E Flow: Sent messages from the upstream system, through the event hub, to the ingestion service, and finally to downstream consumers, ensuring seamless data flow.

- Performance Under Load: Conducted batch message tests to measure end-to-end latency and throughput in a production-like setting.

Tools and Technologies

We used the following tools to support testing:

- Cloud Provider’s SDK: Enabled programmatic message sending to the event hub, used in both automated and manual tests in DEV and TEST environments.

- Cloud Event Hub: A scalable, managed service for event streaming.

- Monitoring Tools: Cloud-native solutions tracked metrics like latency, throughput, and error rates.

- Custom Scripts: Python scripts leveraging the SDK automated message generation and fault injection in DEV and TEST environments.

- Manual Testing Tools: In the INT environment, the upstream system’s message generation mechanism was used alongside cloud provider consoles for test execution and monitoring.

Ingestion Process Flow

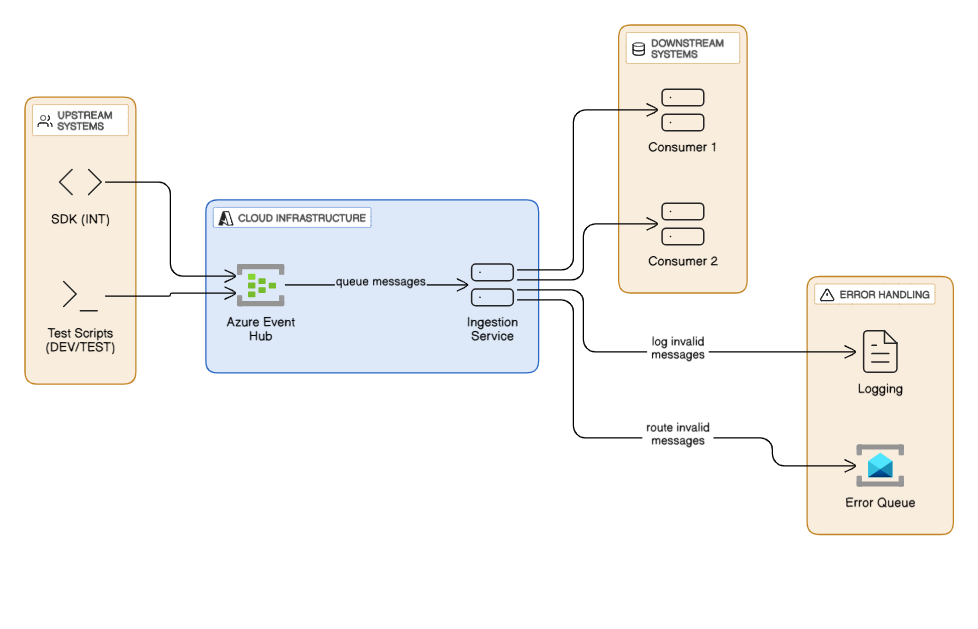

The following diagram illustrates the ingestion process:

Diagram Explanation

- Upstream System/Message Producer: In the INT environment, the upstream system sends messages via its native message generation mechanism; in DEV/TEST, test scripts using the SDK simulate this role.

- Cloud Event Hub: Queues messages, providing a scalable buffer.

- Ingestion Service: Listens to the event hub, validates messages, and routes them to downstream systems.

- Downstream Systems: Consume processed messages for further processing or storage.

- Error Handling: Invalid messages are logged or sent to an error queue.

- Cloud Infrastructure: Ensures scalability and reliability for the event hub and service.

Challenges and Lessons Learned

Testing across environments revealed several challenges:

- Schema Mismatches (INT): E2E tests in the INT environment uncovered schema discrepancies between the upstream system and the ingestion service, requiring updates to validation logic.

- Performance Bottlenecks: Automated load tests in DEV/TEST identified threading issues, resolved by optimizing the service’s concurrency model and increasing event hub partitions.

- Recoverability Gaps: Manual tests in INT showed initial delays in resuming processing after event hub outages, addressed by improving checkpointing mechanisms.

- Manual Testing Overhead: Manual testing in INT was time-intensive, highlighting the need for more automated E2E tests in future iterations.

Conclusion

Testing the ingestion service across DEV, TEST, and INT environments ensured its robustness and reliability in an event-driven architecture. Automated tests in DEV and TEST validated core functionality and performance, while manual and E2E tests in INT confirmed integration with the upstream system and real-world reliability. By combining the cloud provider’s SDK, event hub, and custom scripts, we built a comprehensive testing framework that addressed functional, performance, and integration challenges. This experience underscores the importance of multi-environment testing and a balanced mix of automated and manual strategies to deliver a production-ready ingestion service.